Student Investigations Lab Project

A team of student researchers in the Lab developed a novel methodology to study inequities in the U.S. asylum process. The team compiled and analyzed nearly 6 million U.S. immigration court proceedings spanning 228 case features.

They found that whether a U.S. immigration court grants or denies an applicant's request for asylum is often heavily influenced by factors extraneous to the underlying facts of the case. The most significant of these factors were the dominant political climate of the state where the immigration court sits and the individual variability of the presiding judge. Together, these factors explained nearly 60% of total decision variability. This means that circumstances unrelated to the merits of an asylum case can play a significant role in how the presiding judge rules. Lab team leaders Vyoma Raman and Catey Vera presented these findings at the 2022 Association for Computing Machinery (ACM) Conference on Equity and Access in Algorithms, Mechanisms, and Optimization (EAAMO), where their research received an Outstanding Paper Award.

Research Outcome

Three students produced a study introducing a novel two-pronged scoring system to measure individual and systemic bias in immigration courts under the U.S. Executive Office for Immigration Review (EOIR). Building on prior research showing that U.S. asylum decisions vary dramatically based on factors extraneous to the merits of a case, they analyzed nearly 6 million immigration court proceedings and 228 case features. Using predictive modeling, they explained 58.54% of the total decision variability with two metrics: partisanship and inter-judge cohort consistency. In other words, more than half of the variation in whether the EOIR grants an applicant asylum is explained by the combined effects of the political climate and the individual variability of the presiding judge, rather than by the merits of the case. Their contributions expose systemic inequities in the U.S. asylum decision-making process, and they recommend improved, standardized variability metrics to better diagnose and monitor these issues.

News Outcome

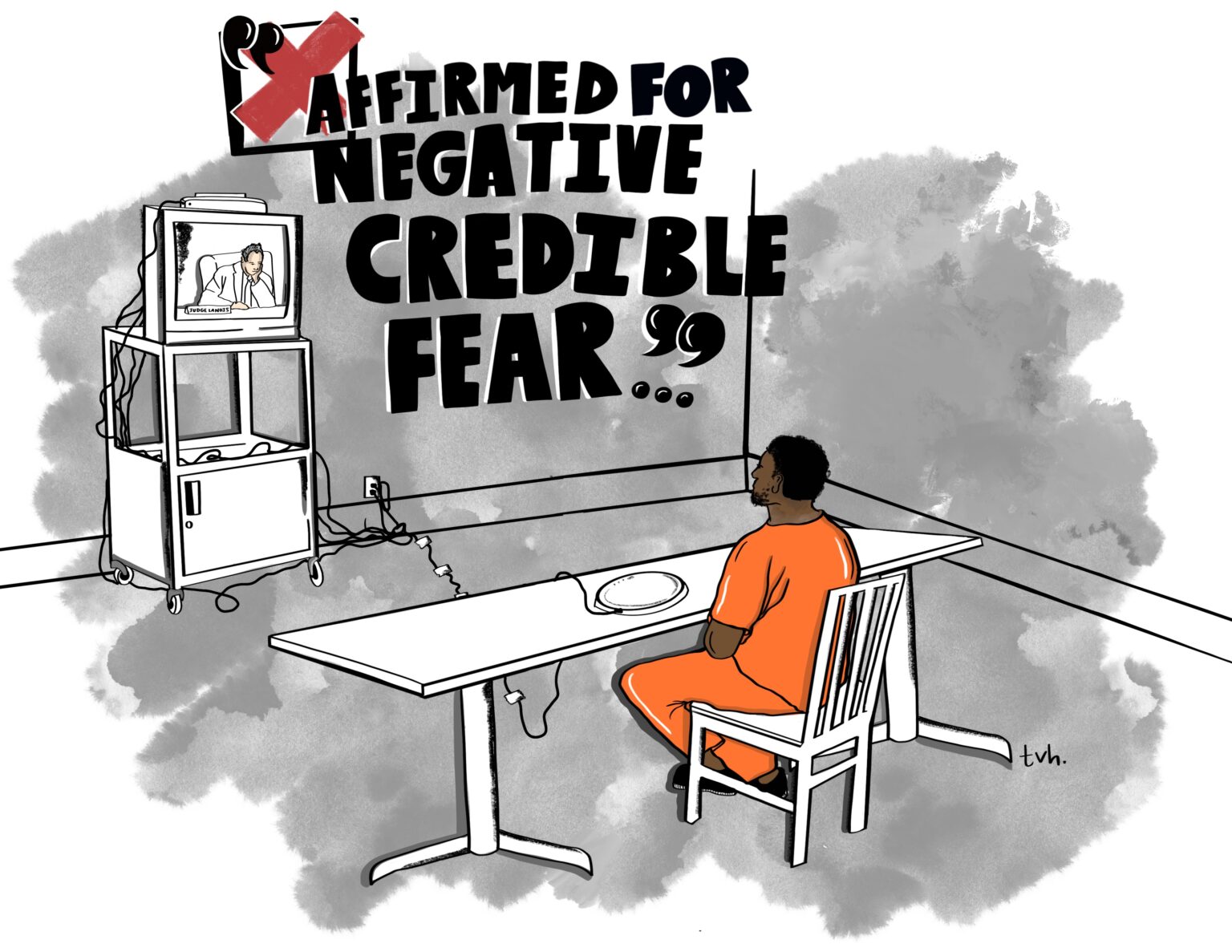

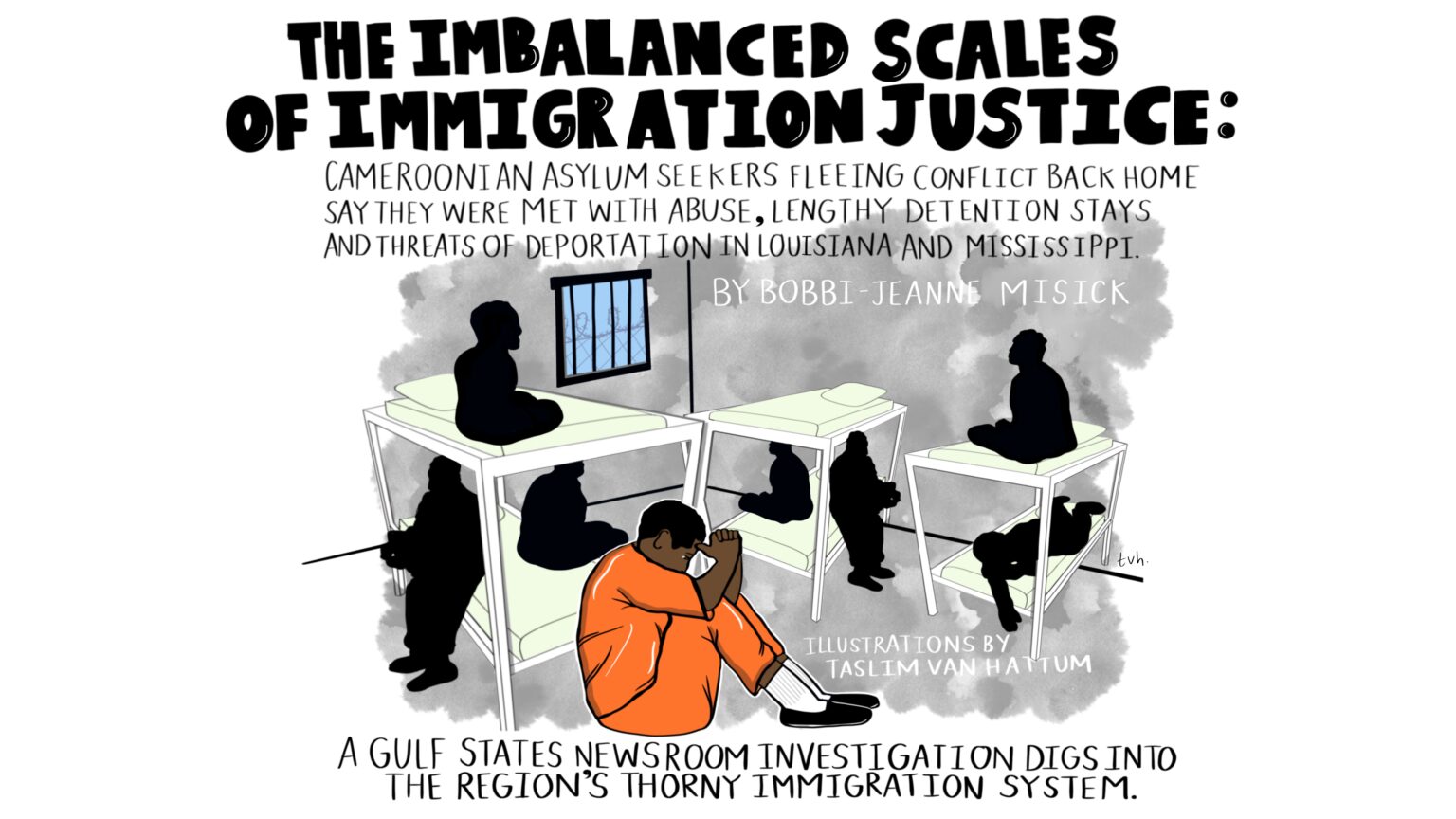

Gulf States Newsroom and Type Investigations published a major news feature that relied partly on data analysis by the Lab team led by Vyoma Raman, Catey Vera, and C.J. Manna. Reporter Bobbi-Jeanne Misick told the story of Cameroonians languishing in U.S. detention centers after the fears that prompted them to seek asylum in the U.S. were deemed not “credible.” With initial data assembly and interpretation provided by the Human Rights Center (HRC) Investigations Lab, Gulf States Newsroom and Type Investigations analyzed immigration courts’ asylum decisions between fiscal years 2018 and 2021.

Student Research Presented at Conference

Investigations Lab team leaders Vyoma Raman and Catey Vera presented their research, completed with C.J. Manna, at the 2022 ACM Conference on Equity and Access in Algorithms, Mechanisms, and Optimization (EAAMO), where it received an Outstanding Paper Award.

Team Acknowledgements

Investigation Leads: Vyoma Raman, Catherine Vera, and C.J. Manna

Staff Advisors: Alexa Koenig, Sofia Kooner, and Stephanie Croft

News

October 7, 2022

Can machine learning measure the impact of bias on U.S. asylum decisions?

Commentary in #Verified by the Human Rights Center, authored by Vyoma Raman and Catey Vera.

December 19, 2022